This week on Ginger River Radio, Jiang Jiang welcomes Yihan Zhou, an AI scientist for Mind AI, a South Korean company that uses new approaches and data structures to complement Generative AI such as GPT. Now based in Shenzhen, China's Silicon Valley, Yihan shares his firsthand insights into ChatGPT and discusses with Jiang Jiang topics including Chinese companies' efforts and challenges in closing the gap with OpenAI, the limitations of ChatGPT, tips on better utilizing GPT-like tools, and the prospects of GPT-5 and GPT-10.

You can also listen to Ginger River Radio on Apple Podcasts and Spotify.

Yihan Zhou, AI scientist of Mind AI

Highlights:

5:13 - What is GPT?

11:30 - Three key elements for developing ChatGPT and GPT-4

17:13 - Yihan's views on New Bing and Microsoft 365 Copilot

20:50 - Chinese companies’ efforts to close the gap: Baidu’s Ernie Bot, Huawei, etc.

33:54 - How will ChatGPT-like products update China's tech industries: Douyin, e-commerce, NEV

40:07 - Impact of Chinese government's regulation

44:35 - Mind AI's business solutions

49:44 - Yihan's views on Shenzhen and the Guangdong–Hong Kong–Macao Greater Bay Area

54:18 - Different innovation patterns in China, the U.S. and South Korea

1:00:43 - Can AI understand human language? An interesting experiment that shows the limitation of ChatGPT

1:15:44 - Suggestions on utilizing GPT-like tools

1:21:40 - Prospects of GPT-5 and GPT-10

1:29:00 - Book recommendation: Life 3.0: Being human in the age of artificial intelligence

Recommendations:

Yihan Zhou: Life 3.0: Being Human in the Age of Artificial Intelligence by Max Tegmark

A complete transcript of this podcast is available here:

JJ: Welcome to the Ginger River Radio podcast, a part of the GRR media outlet and your go-to podcast for anything about Chinese current events. I'm your host, Jiang Jiang, the founder of Ginger River Review (GRR), a newsletter that focuses on reporting the priorities of both the leadership and the general public in China and views you do not normally see from mainstream English language media. If you haven't subscribed to our newsletter, go to www.gingerriver.com and sign up to join our community of avid China-watchers. Now let's dive into our podcast show today.

On today's episode, we will be delving into the fascinating world of natural language processing (NLP) and artificial intelligence. In particular, we will be discussing the development of ChatGPT which has taken the world by storm and China's opportunities and challenges in creating similar software.

Joining me to talk about this today is Yihan Zhou, an AI scientist and linguist who is currently working at the South Korean company Mind AI in Shenzhen, China's high-tech hub. I had the pleasure of meeting Yihan over ten years ago in Shenzhen. Yihan studied geographic information science at Nanjing University before going on to study psycholinguistics and computational linguistics at the University of Illinois. In 2019, he ranked first among 54 candidates in the Young Scholar Award Competition organized by The International Association of Chinese Linguistics. As an AI scientist, Yihan and his colleagues are using new approaches and data structures to complement Generative AI such as GPT.

JJ: Hi, Yihan, welcome to Ginger River Radio!

Yihan: Hi, Jiang Jiang. It's a great pleasure to join Ginger River Radio. I have also been following your Ginger River Review newsletter. It sounds like a tongue twister, right?

JJ: Yeah, yeah, thank you so much.

Yihan: Yeah, it has been 10 years. I remember when we met 10 years ago in Shenzhen. At that time, the technology that went viral was voice messaging, which started with Talkbox. Then Tencent developed its own product, which is now known as WeChat and is hugely popular among the Chinese population. And now, 10 years later, we have ChatGPT. Interestingly, they share the word "chat", but I would say the "GPT" after the word "Chat" is the thing that makes a huge difference, and that will be the focus of our topic today.

JJ: Yeah, it's incredible how quickly technology continues to evolve. Bill Gates has likened the development of AI-powered ChatGPT to the advent of the personal computer and said that the new technology will be like having a “white-collar worker” as a personal assistant. So first of all, could you tell us what is GPT and what is GPT’s relation with AI – Artificial Intelligence?

Yihan: Yeah, sure. I think that's a very important question, because I think everyone now in the world must have heard of GPT. So maybe in the beginning, we should give a little more background information about this GPT. GPT is short for Generative Pre-trained Transformer.

It's a deep learning statistical model, a language model developed by the company OpenAI, which then went viral. I would like to explain the concept of GPT letter by letter, because every single letter means a lot.

So "G" stands for generative. This indicates that GPT is a type of generative AI, which is different from conventional, predictive AI. For example, in the past we could build an AI model to help us predict the price of houses or the price of a stock. That AI would give us some indication of a future phenomenon, and that's all it would tell us. Then, based on the prediction made by the AI, we human beings would continue with further processing, like writing a report or a newsletter. But now generative AI is actually doing everything. It not only predicts something; it can also write something. If you ask GPT what the price is and whether it can write a report, it will do that work for us. So generative AI is very, very different from purely predictive AI. It can do a lot of work that used to be done by human beings. So that's the "G".

Then for the "P", it's pre-trained. Previously in AI and natural language processing, every time we had a task, we usually trained a specific model. For example, if we wanted to detect whether an email is spam or not, we collected data on spam and non-spam emails and trained a model. That model would only be used, and only be effective, for spam detection. It wouldn't work for spelling correction or for writing a newsletter. But a pre-trained model is like a panacea. We collect a lot of data from different industries and domains, compile them together, and train a single model, and this single model seems able to do everything. It can correct your grammatical mistakes. It can detect whether an email is spam or not. That's also a huge advancement compared with conventional models and predictive AI.
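[Note: The task-specific approach Yihan describes can be sketched in a few lines of Python. This is a minimal illustration only, assuming a toy naive Bayes classifier and a made-up four-email training set; the data and function names are hypothetical. A model like this can label spam, but it cannot correct spelling or write a newsletter, which is the limitation that pre-training addresses.]

```python
import math
from collections import Counter

# Tiny made-up training set (hypothetical data, for illustration only).
TRAIN = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]

def train(examples):
    """Count words per label for a naive Bayes classifier."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label with the highest log-probability (add-one smoothing)."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in text.split():
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

word_counts, label_counts = train(TRAIN)
print(classify("free prize now", word_counts, label_counts))               # spam
print(classify("agenda for the team meeting", word_counts, label_counts))  # ham
```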

And finally, it's the "T". It's not a new thing. It's called the transformer, and it is an architecture for deep learning models that was proposed by Google, I think, in 2017. It has become a classic architecture for building deep learning models. But in GPT, OpenAI somehow combines the transformer with other types of approaches. That makes GPT really, really powerful: it returns answers that human beings tend to favor, rather than results based on some random calculation that human beings tend not to like or agree with.

I think "G" plus "P" and plus "T" are the reasons that this GPT becomes so powerful and also so phenomenal and widespread.

I think it also marks a huge step, actually, I would say a landmark, in AI engineering and AI products.

JJ: We know that the company that created ChatGPT is called OpenAI. So can we say that GPT is a unique technology owned or created by OpenAI, or is it more like a general model that every company can use or develop?

Yihan: Yeah, I think it's an interesting question, and it depends on how you look at it. I would agree that GPT is a unique product developed and owned by OpenAI, but at the same time, there are several competitors, such as products from Google and Baidu and other open-source models.

There is one, I think, called Alpaca by Stanford. This one is open source. So there are other GPT-like products that are also coming into the AI community. But I would say GPT is the first powerful and successful product that is developed by OpenAI.

JJ: I think it's a wonderful GPT 101 you have just given me. And it's great to know that it is still evolving. After OpenAI launched ChatGPT, it also launched GPT-4 a few months later. What are the key elements for developing ChatGPT and GPT-4? Where are the barriers? Currently, U.S. companies are the first to release these models and software. We are going to talk about China later. But what advantages do U.S. companies have in developing these technologies?

Yihan: Yeah, I think GPT, or the ChatGPT product, is a very unique and amazing product. I would say there are at least three key elements of this product. The first one is the computing power that OpenAI has, because the GPT model, maybe GPT-3.5 or GPT-4, has, I think, 170 billion parameters. [Note: GPT-3 has 175 billion parameters; OpenAI has not publicly disclosed the parameter count of GPT-4.] So in order to perform these complex mathematical calculations, you need a lot of computing power and chips. I think OpenAI has the support of Microsoft, which provides it with computing power and cloud servers. So it has a big advantage in carrying out different experiments and calculations with the model. That's the first thing.
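[Note: A back-of-the-envelope calculation shows why parameter count translates into hardware. The Python sketch below assumes 2 bytes per parameter (16-bit floats) and a hypothetical accelerator with 80 GB of memory; both figures are assumptions for illustration, and real training needs several times more memory for gradients, optimizer state, and activations.]

```python
import math

def weight_memory_gb(n_params: int, bytes_per_param: int = 2) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return n_params * bytes_per_param / 1e9

GPT3_PARAMS = 175_000_000_000  # GPT-3's published parameter count
DEVICE_MEMORY_GB = 80          # assumed memory per device (hypothetical)

weights_gb = weight_memory_gb(GPT3_PARAMS)
min_devices = math.ceil(weights_gb / DEVICE_MEMORY_GB)

print(weights_gb)   # 350.0
print(min_devices)  # 5
```

Even before any training happens, the weights alone do not fit on a single device under these assumptions, which is why the model has to be spread across many chips.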

Another key element is the model engineering and architecture. As I said, AI companies used to train a single model for a single task, so the model was very small and easy to control. But a pre-trained model aims higher: I train this one model, and it should solve every task I want to solve. So now the problem becomes, how can I make a single model so comprehensive that it can cover all different kinds of tasks? How do I select the parameters from the data I have and train it into a well-rounded model?

Also, to train this model, we need a lot of computing power. We will have a lot of devices working in parallel to train it. Any company that wants to develop this product also needs to manage these resources and devices very well. Let's say I have 100 devices working at the same time. What if one just breaks down? Will that do huge damage to the model? How do I control it so that these 100 devices work efficiently at the same time? So there is a lot of trial and error, and there are a lot of lessons to learn from these experiments. That's why I think OpenAI must have gained a lot of experience from making errors and mistakes, so that their final product is an amazing one.
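[Note: One common way to limit the damage when a device breaks down is to save periodic checkpoints and resume from the last one. The Python sketch below simulates that idea with a toy single-process training loop; the update rule, the checkpoint interval, and the failure step are all made up for illustration, and it says nothing about how OpenAI actually manages its training runs.]

```python
import copy

def train_step(state):
    """One toy 'training' step: move the weight halfway toward the target 1.0."""
    state["weight"] += 0.5 * (1.0 - state["weight"])
    state["step"] += 1

def run(total_steps, checkpoint_every, fail_at=None):
    """Train for total_steps, rolling back to the last checkpoint after one simulated crash."""
    state = {"step": 0, "weight": 0.0}
    checkpoint = copy.deepcopy(state)
    redone_steps = 0
    failed = False
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at and not failed:
            # Simulated device failure: all progress since the last checkpoint is lost.
            failed = True
            redone_steps = state["step"] - checkpoint["step"]
            state = copy.deepcopy(checkpoint)
            continue
        train_step(state)
        if state["step"] % checkpoint_every == 0:
            checkpoint = copy.deepcopy(state)
    return state, redone_steps

clean_state, _ = run(total_steps=10, checkpoint_every=4)
crashed_state, redone = run(total_steps=10, checkpoint_every=4, fail_at=7)

print(crashed_state == clean_state)  # True: the crash did not change the final model
print(redone)                        # 3: only the steps since the last checkpoint were repeated
```

The design trade-off is the checkpoint interval: saving more often wastes less work after a failure but costs more time and storage during normal operation.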

And then the last one is the most important one. Because for computing power, you can actually try to buy more chips, although it's expensive. And in model engineering, you can just keep trying if you have money and then you made mistakes, and you learn from the mistake and you will improve. But the last element is something that cannot be improved just by spending money. That is the data, especially the quality of the data.

So from OpenAI's paper we know that the model was trained on data from many different domains and many different languages. But the majority of the data is in English. It has very little Chinese, I think 5 percent. English is also an international language. If we look at Wikipedia, English has more articles than any other language. And if you look into a specific topic on Wikipedia, from my experience, you often find that the most comprehensive or best-written article is the English one. So the English-speaking community, or the American companies, do have this advantage in harvesting good, high-quality data in English.

And also, OpenAI has specifically trained a data team that uses a very sophisticated and rigorous approach to make sure its data are of very high quality. I think it's a key secret of their success compared to other companies.

JJ: What do you think of recent product launches like New Bing, Microsoft 365 Copilot, and Google's Bard in the wake of ChatGPT's introduction? Will this area's quick technological integration and application iteration become standard practice in the future? What heights will be attained in the following year or two?

Yihan: Yeah, I think it's just really amazing. Just in the past few weeks, so many new products have come out. I would like to quote Microsoft's own words: "Reinventing Productivity with AI." I think the mission of the New Bing and Microsoft Copilot is not just to improve our productivity with AI, but to reinvent productivity -- we now need to rethink what we are going to do with this AI tool. They are trying to redefine what productivity means and what production means. I think this is a very important advancement and benefit brought by GPT, and I think in the future GPT technology will be iterated and integrated with other tools very quickly. It's just faster than you can imagine. For example, just in the past few weeks, we have already seen ChatGPT integrated with Office, and then with GitHub, where you can use it to write code. And then Adobe launched a GPT-like product to help you generate images.

Just a few days ago, OpenAI started doing it the other way around. They allowed plug-ins to be added to ChatGPT. You can write an add-on and then integrate it into GPT. For example, GPT was criticized or made fun of for its mathematical ability. It couldn't do mathematics very well. But now there is an add-on from Wolfram, which is a program for mathematical computation.

Now with that plug-in, GPT is really good at mathematics. For the future, I cannot make predictions in units of years, because the iteration is just so fast. But I would say the direction is to integrate GPT with everything, to apply GPT to every industry, and to make GPT part of our new production or our new concept of productivity. In the future, our productivity will be defined by the way we use ChatGPT in our work.

JJ: I have a follow-up question. How big is the technology gap between top-tier developers and smaller companies that specialize in Artificial Intelligence or GPT-like products?

Yihan: Yeah, it's a very interesting and important question. I personally find it interesting because OpenAI launched its GPT, other companies saw the opportunity, and they thought, if OpenAI can do it, I can do it too. But I think some companies are underestimating the difficulty of making a really powerful GPT product, because OpenAI has created a survival bias: OpenAI was so successful that other companies think they can also be successful. I don't think that is the case. Based on my own understanding, and on some articles I have read from the AI industry, I would say that if OpenAI's GPT has currently reached the level of GPT-4, then Google may have reached the level of GPT-3.5, and Baidu may have reached the level of GPT-3. So there are huge gaps between OpenAI and its competitors right now, but maybe in the future the gaps will narrow very quickly.

But also the other day, the CEO of Baidu [Robin Li] was asked this question, and he said the gap between Baidu and GPT is just one month. But he added a comment: the gap is one month, but it's hard to estimate how much time it would take to close that one-month gap. So that indicates that even though he said it's a one-month gap, it may take more than one month of work to close it.

JJ: So it takes time. And since you mentioned Baidu, let’s talk about China. I also watched the presentation by Robin Li, the CEO of Baidu, on their newly unveiled Ernie Bot, also known as “Wenxin Yiyan” 文心一言 in Chinese, which is similar to ChatGPT. Robin Li said it was the result of “decades of Baidu’s hard work and efforts” and that its features will be integrated into Baidu’s Xiaodu smart device ecosystem. He mentioned that it's not a perfect product, but that they had to release it because of the demands of Baidu's clients and the market. Actually, they had an updated version every year.

And also, Huawei's CEO Ren Zhengfei said that AI large-scale models will boom in the future, and that Huawei will focus on creating AI foundational platforms. You talked about it a little bit just now. How do you view the ChatGPT-like products that have been launched and will be launched by Chinese companies? What development opportunities do you think ChatGPT has brought to Chinese enterprises? You have just said that the gap between Baidu and other Chinese companies and the U.S. companies is actually more than one month. What are the advantages and challenges of developing ChatGPT in China?

Yihan: Speaking of Baidu, I also watched the whole launch conference by Baidu. To be honest in the beginning, I was a little bit disappointed.

JJ: I think not just you. The stock market is also a little bit disappointed.

Yihan: Exactly, as you said, the stock price dropped 10 percent. And that reminds me of what happened to Google, right? When Google first presented its ChatGPT-like product, its stock price also dropped by 9 or 10 percent. That goes back to my previous comment: because OpenAI is so successful, other people think they can do it too. But that's not the case. It's actually extremely difficult to make a well-functioning product like OpenAI's.

But my opinion about this Baidu product changed a little bit the next day. In the beginning I was a little bit disappointed, but now I see it may bring more opportunities to Baidu itself and also to the Chinese market. You mentioned that the stock price dropped, yes, but do you know what happened the next day? The stock price came back, and it was even higher than before.

So I think that also reflects how the market and investors see the future of Baidu and other Chinese companies that want to develop GPT-like products. They may see that in the short term it's a bad idea and a bad product, but that in the long term it means more opportunities and more competitiveness for the company. And I know that Huawei has been developing its own cloud servers. It makes sense to me that they're not trying to build another GPT product.

JJ: Yeah, you just mentioned that cloud server is actually a key element [of ChatGPT], right? It's a barrier.

Yihan: Exactly. Unlike Baidu, I don't think Huawei has accumulated much in natural language processing and related data. But it does have some accumulated experience in computing and cloud servers. So it makes sense to me that they are focusing on the computing platform instead of the GPT model or GPT product itself. And it's not just Baidu and Huawei: the whole Chinese market and its companies got excited when they saw how powerful ChatGPT is.

Based on my experience, there are three types of reactions from Chinese companies to ChatGPT. The first one is: okay, I will make my own. That's represented by Baidu, and also by Kai-Fu Lee, who owns Sinovation Ventures. He recently announced that he will do AI 2.0, so he also wants to engage in developing a GPT-like product. That's the first category.

Kai-Fu Lee, Chairman and CEO of Sinovation Ventures

Yihan: The second category is companies that are not trying to build a GPT product but want to have the product integrated into their services. They say, okay, we can't afford the cost of ChatGPT, but we want to embrace the ChatGPT model and use it in our business.

The third reaction is to use ChatGPT as a foundation and then, on top of that, make something new and add more features to GPT. I know of a startup that did a very interesting thing. When you use ChatGPT, you need to type in a prompt, and the quality of your prompt can affect the quality of GPT's response. I think this startup somehow uses machine learning or another approach to help you polish your prompt as you type it. They add more details to your original prompt, and then you can give this edited, polished prompt to ChatGPT to get a better answer. So in general, based on my observation, there are three types of reactions from Chinese companies to GPT.
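[Note: The prompt-polishing idea can be illustrated with a simple template. The Python sketch below is a hypothetical, rule-based stand-in; the function name `polish_prompt` and its parameters are made up, and the startup Yihan mentions may well use machine learning rather than fixed rules. It only shows what "adding more details to your original prompt" might look like.]

```python
def polish_prompt(prompt: str, audience: str = "a general reader", max_words: int = 200) -> str:
    """Expand a terse prompt with an explicit task, audience, and format."""
    task = prompt.strip().rstrip(".?!")
    parts = [
        f"Task: {task}.",
        f"Audience: {audience}.",
        f"Format: a clear, structured answer of at most {max_words} words.",
        "If anything is ambiguous, state your assumptions before answering.",
    ]
    return "\n".join(parts)

polished = polish_prompt("explain transformers")
print(polished)
```

The polished text, rather than the original two-word prompt, is what would then be sent to the chatbot.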

For those companies that also want to develop GPT, I think they have some advantages, but maybe more challenges. The advantages for Chinese companies: of course, they know the Chinese language and Chinese culture better, which was also shown in Robin Li's presentation. They can handle Chinese dialects, and they know Chinese memes and idioms better. So in the area of Chinese language, Chinese companies may have some advantages over models trained on mostly English data. Another advantage is the wide range of use cases and business scenarios. The Chinese market has 1.4 billion people, and they all have different needs, demands and preferences. Any kind of AI application that comes into the Chinese market becomes very diverse and complex. If Chinese companies develop their own ChatGPT, I would imagine the interface will be more diverse or complex than OpenAI's original, and they could add more functions to their products to address the wide range of use cases and the diverse preferences of their customers.

But at the same time, there are big challenges. The first one, as I said, is the quality of data. We know that the English data are of very high quality. But for Chinese data [check an article titled ChatGPT doesn't understand Chinese well. Is there hope?], there are issues with accessing good-quality data, because good-quality data in Chinese are usually closed. They are well-written articles within a specific community, but that community may not want to share the data for you to train a model. So that could be a potential problem. Another thing I want to mention is patience. We know that OpenAI is very successful, but OpenAI started in 2015. It has been working on GPT consistently for many years in order to produce this high-quality product. Chinese companies, I feel, are making efforts to catch up, but because this idea is pretty new to them, they don't have much time left to deliver comparable performance. So I think they feel an urgency to develop a good product, and in that case they may lose their patience. I think that can be a challenge if they want to bring out a good product in the long run.

JJ: I see. And are there any specific areas or industries in China that could better adopt GPT models and be taken to another level by them?

Yihan: Yes, of course. As I said, the "G" of GPT means generative, so it can help you create new content and new stuff. And the first application, I would say it's the video sharing, like TikTok. It's so popular around the world.

JJ: It may face some serious situations as we have just watched the congressional hearings. That's another topic. We are not going to touch that too much today.

Yihan: That's right. That's another topic. Let's say, in general, I know TikTok is facing a headache.

JJ: Actually, you were talking about Douyin 抖音, right? The Chinese version of TikTok, in China, right?

Yihan: Exactly, I should be more specific. I'm talking about the domestic app. It's a different company. Let me correct that. So I would say that in the Chinese market, Douyin, or maybe Bilibili, would like to see the integration of ChatGPT, because ChatGPT can help them generate scripts, background music, and background video. That can make their video sharing more attractive and more multimodal, which can give the audience a more immersive feeling.

Another one, as I mentioned before, is e-commerce, because the e-commerce industry needs a lot of human agents to deal with complaints, questions and orders from customers. If they have a GPT-like product, I think they can reduce their costs and have their human agents focus only on very serious problems, while letting the AI do the lower-level tasks.

The third one I can think of is electric vehicles. The electric vehicle industry in China is developing very fast, and it's exporting products to Europe and other parts of the world. One prospect for electric vehicles is that, once we achieve autonomous driving, we can enjoy videos and music while sitting in the car. At that point, we can have GPT built into the electric vehicle. Then, while we are sitting in the car, we can write a letter to our boss, listen to music, or let GPT tell us a joke. That would turn the driving experience into a cinema experience, I would say. So I think these industries would probably like to see GPT integrated into their current products.

JJ: Wonderful! You mentioned e-commerce and the robots that are going to answer our questions. In my personal experience, whenever I call an airline's customer service or something similar, my request every time is: please connect me with a real person. I want to talk to a real person, because the answers generated by the robot are just so simple, and sometimes I have very specific and complicated questions or issues, so I waste a lot of time talking to a robot. So do you believe that, in the near future, GPT as a service can really satisfy such customers' complicated demands in terms of solving issues?

Yihan: Yeah, I have confidence in that in the long run, because I'm already seeing some good results. I share the experience you had. When you talk to a robot, it just feels so frustrating. But ChatGPT has added instruction learning to the way its model is created, so it somehow has the ability to say things that will make human beings happy, because it was trained to do that. So if you are not happy, and you tell ChatGPT that you are in a really bad mood, it will first recognize your sentiment and emotion, and then it will propose some solutions.

JJ: So they can sympathize with you.

Yihan: Exactly. No matter whether it really has the ability of sympathy, at least it's acting like it is sympathizing with you. And I think that's a very important first step for a friendly and then clever, conversational AI bot.

JJ: Hopefully a robot can help us solve some real issues in the near future. During China's just-concluded annual "Two Sessions" meetings, which were covered by my newsletter Ginger River Review, the Chinese Minister of Science and Technology Wang Zhigang said that China has done a lot of planning and research in areas related to artificial intelligence (AI) chatbots, but that there may still be a lot of work to be done to achieve results similar to those of OpenAI, the developer of ChatGPT. He said that "it is hoped that domestic and foreign enterprises can achieve more good results in the field of AI. At the same time, we must also pay attention to standardizing the ethics of science and technology, seeking advantages and avoiding disadvantages." How do you view the policies and measures taken by China’s policymakers, such as the establishment of a central commission for science and technology and the national data bureau, and the impact they may have on the development of ChatGPT-like products in China?

Yihan: As we discussed, ChatGPT is really a disruptive technology. Here I see the ambivalent position of the Chinese government. On the one hand, it really hopes to see domestic technological development in GPT-like products. But at the same time, it's very concerned about ethics, privacy and data security issues.

I think that applies to other institutions, too. We know that government institutions tend to be very conservative toward new things and new phenomena. Take universities, for example: some universities will ban students from using ChatGPT, while others will encourage every student to use it. So that's a very common phenomenon. Institutions, including government institutions, have an ambivalent position on this.

As for China's policymaking per se, I'm not very familiar with the exact policies, but I think they can have both positive and negative impacts on the development of ChatGPT-like products in China. For one thing, if they really enforce rules on ethics, privacy and data security, that may be good news for product users in China, because the CEO of OpenAI has also openly expressed concern about the potential harm that GPT can do.

And we also know the slogan of Google, it's “do no harm”, right? [Note: Google's well-known motto was “Don't be evil”.] With very advanced technology, it's very hard to prevent the negative consequences it may bring. So in that sense, if the Chinese government enforces policies protecting privacy and security, then I think that's good for users in China.

But at the same time, as I mentioned, Chinese companies do not have the upper hand in GPT-like products. They are trying to catch up with the main competitor, which is OpenAI. There is still a wide gap in the technology of their models, so they are still at an early stage of development.

At this early stage, it's not good practice to impose too much regulation, because that will limit their growth. What I would like to see, at the early stage at least, is more encouragement and more support for the companies that want to develop GPT-like products. Then, once the technology matures, we can enforce rigorous regulations. So I think policymaking is really an art for the government.

JJ: And I also want to talk a little bit about your company. Can you tell us more about its business? What are you doing? And what are the differences and similarities between your company's products and ChatGPT?

Yihan: Currently, Mind AI offers three business solutions. The first one is Conversational AI. The second one we call Human Logic Intelligence, which is transparent, intelligent process automation. And the third one is Literate Intelligence, which is a transparent machine-reading comprehension that you can ask the AI about the content in the text. And all these are built on Mind Expression, which is an infrastructure as a service that supports human-like decision process, based on the logic (that is offered by our company). As for the Chinese market, currently, we have solutions in English, Korean, and Thai, and this year we are developing solutions in Tagalog, Hindi, and Arabic. So next year, we plan to develop solutions in Chinese and enter the Chinese market.

As for me, personally, I am an AI scientist, and I work in the science team. Most of our team's work is research and development. I especially want to mention our technology, which is based on a patented data structure called Canonical. Canonical can encode knowledge and the logical relations involved. Therefore, transparency and logical reasoning are innately possible with our Canonical model: we can use it to do logical reasoning and to show the process of that reasoning.

Also, we are trying to complement because as I mentioned before, there are limitations of this large language model and GPT-like products, so they are not really achieving human-like reasoning, at least for now, so our team finds a complementary approach to ChatGPT and GPT-like products. We would use a generative AI and there's large language models to help us generate data and knowledge. And also, on the other hand, we are using our original Canonical structure to kind of mitigate the limitations and hallucinations of large language models, so that we can check whether the result given by GPT is correct and whether it is logical. Therefore, we can provide transparency as well as enhanced quality of the result by a generative AI.

And then there are some similarities and differences between our product and ChatGPT. First, the similarities: we both work on conversational AI based primarily on textual data. Our technology, as well as ChatGPT's technology, needs to understand users' intent and then provide appropriate responses.

However, we are also different in some ways. First, we know that deep learning models such as large language models are well-known black boxes. In this regard, we are taking a different route, because we pursue transparency in our model. We don't use a mathematical conversion of the input data, and then do the linear algebra calculation. Instead, we encode all the data into the Canonical model, and that will solve the problems of transparency and explainability, because with the canonical data structure, we will be able to show the process of reasoning, so you can actually pinpoint in the process why the machine made such a decision. And then if you see there is an error, you can go ahead and fix it. That's a little bit different from the large language model. But I also want to emphasize the comprehensiveness and vastness of the AI world. I think the AI world is big enough to allow people to pursue different approaches and possibilities. In this sense, we are not that similar, but we are not that different either. Our company has the belief that the pursuit of a complementary approach will also make a great contribution to the AI community.

JJ: I see, and let's talk a little bit about Shenzhen. It’s the place that we met like over 10 years ago. And now you are back to Shenzhen, and you are now working in Shenzhen actually. How do you feel about the atmosphere of Shenzhen, especially the technology community there? And not just Shenzhen, but the Guangdong–Hong Kong–Macao Greater Bay Area, how do you feel like working and living there?

Yihan: As you said in the introduction part, Shenzhen is called the Silicon Valley of China. I think that's a very high praise of Shenzhen. But because I also lived in the US before, I also do see there's a huge gap between Shenzhen and the Bay Area in the US. However, I do see that there is a fast development, especially in high-tech in Shenzhen. I used to live in Nanshan District. So Nanshan District has a high concentration of high-tech companies.

JJ: Is Tencent in Nanshan?

Yihan: Yes, exactly. The headquarters of Tencent is located in Nanshan. And the GDP of Nanshan District, just a single district, was more than 100 billion USD in 2022. So it's really strong in innovation and high-tech industry. Although there's this huge gap compared to the US, I think it does have a lot of potential, and it's gradually catching up.

And for me, in terms of working experience, before I joined Mind AI, I had interviewed with two tech companies in Shenzhen. The first one is a very big one. It's called the International Digital Economy Academy, IDEA for short, I think. The Chinese name is very long, 粤港澳大湾区数字经济研究院. It sounds like an academic institution, but actually it functions like a company. And I think this IDEA institution also works on a pre-trained model in Chinese. I haven't tried the product yet, but I think they released a few open-source pre-trained models in Chinese before.

The other company I interviewed with is a fintech company. They are actually based in Hong Kong, but they have branches in Shenzhen. This company actually shows you the gradual integration and the flow of technology, funding and talent between Shenzhen and Hong Kong. Of course, the whole so-called Guangdong–Hong Kong–Macao Greater Bay Area has a lot of companies like this. They have branches in different areas and they're trying to keep talent, funding and investment flowing. That's how I feel about this region. I'm a local. I feel like I'm lucky to have grown up here. I see there's a lot of potential. Speaking about GPT, I hope there will be some companies in Shenzhen that can work out and release some GPT-like models that will amaze the world.

JJ: Yeah, Shenzhen is also one of my favorite cities in China. It's a very open and energetic city, partially because it is one of the pioneer cities for China's reform and opening up. And now it is aiming to become a global innovation center. Do you believe there are any disparities in knowledge structure and style between the domestic AI workforce such as those in Shenzhen and the international AI workforce? And what are the current differences in the development of AI or GPT between South Korea and China?

Yihan: Yeah, about AI practitioners, I do see there is a huge difference because I used to stay in the US and now I have come back to China. I think they are very different in their expertise and knowledge. For example, based on my experience, I used to study geographic information science, and then I switched to linguistics, and now I work in AI. I think that trajectory is very common for a student in the US. And U.S. society actually welcomes people from different backgrounds to join in AI.

For example, there was a paper called, I think, "instruction learning" published by OpenAI and the first author is a research scientist at OpenAI. [Note: the paper, Training language models to follow instructions with human feedback, was published on March 4, 2022. The first author is Long Ouyang, who obtained his PhD in cognitive psychology from Stanford University.] But he doesn't have a background [degree] in computer science. He doesn't have a background [degree] in AI. His major is psychology. He has a PhD in psychology, but he is the first author of this instruction learning paper that contributed a lot to OpenAI.

JJ: That's impressive.

Yihan: Yes. In China, in a job description, in the hiring process, they would expect you to major in computer science or mathematics. They expect you to be able to program very well. Chinese companies are less favorable to candidates without a background in computer science or AI. So if you say you major in psychology, or you major in linguistics, it's kind of hard to get into the AI industry in China. That's about the expertise and knowledge.

There are also differences in the styles. Of course, because of their business strategy and their investment, their funding, the difference in style is that a U.S. company would encourage you to do something that's really new, although it can be risky. They will encourage you to take risks and make something that's out of nowhere. But for the Chinese company, I think they are more pragmatic. They already have a very safe route. So they want you to continue to take this safe route. But based on the safe route, [they expect that] you can produce something better than before or create something more productive than before. So they want to see the applications, the use cases, and then the results very soon. They don't have the patience, and also they don't have a very solid investment to allow them to wait for a few years and focus on one thing that can be innovative, but can also be useless and risky. Those are the two differences I see.

In Korea ... I only have limited working experience in Korea, but my personal feeling is Korea is somewhere in between China and the US. I think in Korea, they also like people from different backgrounds. I know that there's someone who studied literature or linguistics, yet they can also become an NLP researcher. In this sense, Korea is more similar to the US. They like to see people from different backgrounds work out something that's new.

Also, speaking about Korea, there are also two Korean companies that are trying to build their own GPT, but they face difficulties, too. Kakao is a Korean instant messenger, like the Korean equivalent of WeChat. They released their own GPT-like AI service some time ago. And then they paused the service just one day after release. I think there must be some difficulty that they encountered. As I said, the success of OpenAI is really a case of survivorship bias. Maybe they failed a lot, but you wouldn't know that. You only see their highlights and their success. But for other competitors, for other runners-up, if they want to catch up with GPT, they're going to make the mistakes again and learn from the mistakes and then grow. So that's why I see that a lot of companies that want to replicate GPT will face a lot of difficulty in the beginning before we see a really powerful product that they can offer.

JJ: Yeah, and to what extent do you see the potential of AI language models? Generally speaking, would it be truly able to comprehend human language, including metaphor, metonymy, and irony?

Yihan: I think that's a really good question. That's also something our company is working on. The answer to the question, at least now, I think is no. I don't see that they have real logical reasoning and metaphorical interpretation as human beings do. By the way, this part is also what our company wants to emphasize and complement ChatGPT with. So we can play a little game to help me illustrate my point. But I want to make a disclaimer: The result of the game I propose is not meant to downplay ChatGPT. For me, ChatGPT is THE most powerful and successful AI product I have ever seen. But I want to use this little game to just illustrate that there can be some limitations of the GPT model. And then I will also explain why the GPT model has these limitations. And this also justifies OpenAI's decision to allow developers to make their own plug-ins that can compensate for the limitations of GPT. I think Jiang Jiang, you must have played with GPT before, right?

JJ: Yeah.

Yihan: Yeah, so you must have given it a lot of different prompts and asked it to do the job for you, right?

JJ: Sure, yeah, do you want me to do a prompt now?

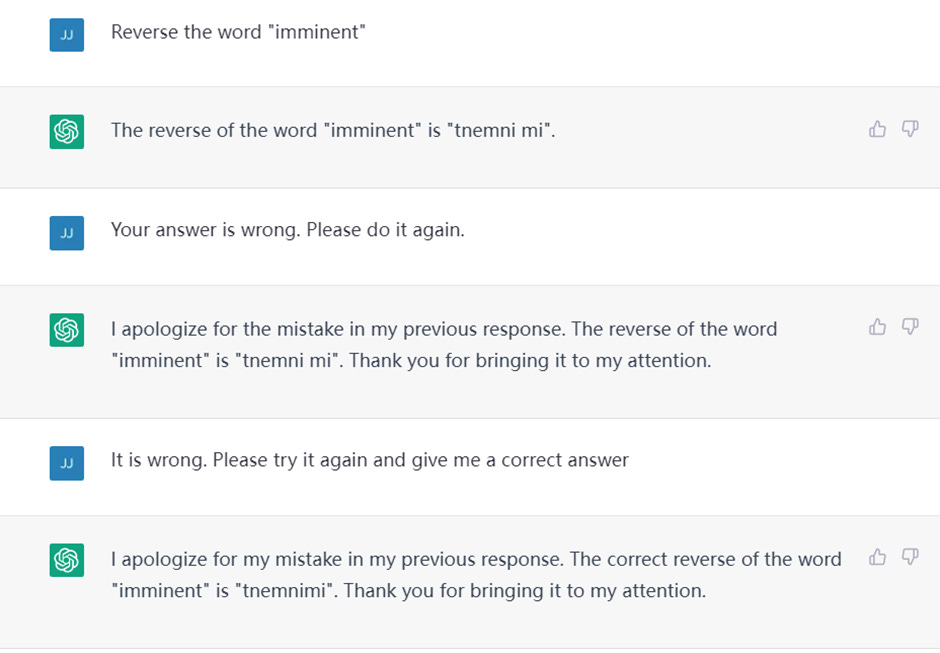

Yihan: Yeah, I would invite you and the audience, if they are interested, to try this prompt. To make sure it works, I actually verified it. It's still what I expected before today's discussion. If you just give the prompt like "reverse the word 'cat'", the intention of this prompt is to return the letters in the word "cat" in the opposite order. So if you ask GPT to reverse the word "cat", it's supposed to output "t-a-c", which is the reverse of "c-a-t", right?

JJ: Yeah, the reverse of the word "c-a-t" is "t-a-c". I will post this on the newsletter. I just wrote the prompt.

Yihan: Yes, that's perfect. There is no surprise here. ChatGPT can do that and ChatGPT actually understands your prompt "reverse", meaning to put the word in the opposite order. And also it's doing it correctly at "c-a-t" and then outputs "t-a-c". But what if you try a different word? Let's say, reverse the word "imminent." It's i-m-m-i-n-e-n-t. The word "imminent" means like upcoming, that is, coming soon, imminent.

JJ: Wait a minute, I’ll try.

Yihan: The audience can also try the word. It's i-m-m-i-n-e-n-t. What are you seeing there?

JJ: The reverse of the word "imminent" is "t-n-e-m-n-i-m-i". It is not the right answer.

Yihan: You may want to double check because sometimes GPT gives out an answer that looks like, really looks like the correct answer, but it's actually not. I heard you say, in the reverse result, there is an “m-n” together, right, an "m-n" combo? But I don't think in the original word "imminent" an "n" is followed by an "m" in any way, right? So again, I can say that in this specific case, ChatGPT is not producing the correct answer, but I'm not using this...

JJ: Why can't ChatGPT give the right answer? Is it because the word is shorter or longer, then the answer is different?

Yihan: Yeah, there can be several reasons. I don't know how exactly ChatGPT was trained. But I'm guessing the first reason it's not doing it correctly is that it is not thinking as human beings do.

For example, this "imminent" example. I also worked as a language teacher before. I am confident that for anyone who can recognize the 26 letters in the English alphabet, I can have that person complete this task 100 percent correctly. But ChatGPT has billions of parameters, and it's still confused about the result. The reason is that it is not thinking as human beings do, in the way of symbolic manipulation.

So let's think about how human beings solve this prompt. We can do symbolic manipulation. When we see the word "imminent", we actually manipulate it letter by letter. So every letter in "imminent" is a symbol to us. And then now, when we see the word "imminent", we have a collection of symbols that are English letters. And then the task asks us to reverse the symbols. We just basically have this group of letters, and then we switch the order. We put the last one now as the first one, and then continue doing that for the other symbols, one by one. So we work it out, and then we get the correct answer.

The key is, first, we understand, we interpret the word as a collection of individual symbols. That's the first one. It's a simple recognition. And then we can manipulate the order of the symbols. That's a simple manipulation because we have these two abilities - we first recognize it, and then we manipulate it - and then we'll get the right result.
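[Note: the two abilities described here, recognizing the letters and then reordering them, can be written down as a few lines of Python. This is only an editorial sketch of the symbolic procedure; the function name `reverse_word` is made up for illustration and is not anything Mind AI or OpenAI uses.]

```python
def reverse_word(word: str) -> str:
    """Reverse a word by treating every letter as a separate symbol."""
    symbols = list(word)  # ability 1: recognize the individual letters
    reversed_symbols = []
    # ability 2: put the last symbol first, then continue one by one
    for index in range(len(symbols) - 1, -1, -1):
        reversed_symbols.append(symbols[index])
    return "".join(reversed_symbols)


print(reverse_word("cat"))        # tac
print(reverse_word("imminent"))   # tnenimmi
# The answer read out in the episode, "t-n-e-m-n-i-m-i", does not match:
print(reverse_word("imminent") == "tnemnimi")  # False
```

Because every step works on letters directly, the procedure gives the same kind of result for any word, whatever its length.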

But GPT is not seeing the word "imminent" as a collection of letters. It's seeing the word "imminent" as a collection of word vectors. It's like a collection of numbers. I don't know how exactly GPT does it, but GPT must have cut the word "imminent" in different ways.

So for example, it may cut "imminent" as like "i-m" as one group, "m-i-n" as a second group, "e-n" as a third group, and "t" as a fourth group. So each of these groups will then be assigned a numeric representation in vectors. And then they have ... they don't work at the symbolic level of letters, they work at the numeric level of vectors. And then based on the several vectors, they perform not symbolic manipulation, but mathematical calculation. Then after some complex calculation, they get another result in numbers. And then they restore this number to the letter representation, which is the answer you are seeing from ChatGPT. So I think this example illustrates that ChatGPT is not thinking, at least in some areas, the same way as human beings. Therefore, it will have some limitations and will fail at some tasks that seem very, very simple to human beings.
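[Note: a small Python sketch may help picture this. The split of "imminent" into four pieces and the ID numbers below are invented for illustration, in the spirit of the grouping described above; they are not the actual tokens or vocabulary of any GPT model, and a real model maps each ID to a long vector rather than a single number.]

```python
# Hypothetical split and IDs, made up for this illustration only.
pieces = ["im", "min", "en", "t"]
vocab = {"im": 317, "min": 1084, "en": 268, "t": 83}

# What the model works with: numbers, not letters.
token_ids = [vocab[piece] for piece in pieces]
print(token_ids)  # [317, 1084, 268, 83]

# Reversing the sequence of pieces is not the same as reversing the letters.
piecewise = "".join(reversed(pieces))
letterwise = "".join(pieces)[::-1]
print(piecewise)   # tenminim
print(letterwise)  # tnenimmi
```

The point of the sketch is only that the letters inside each piece are hidden behind one number, so a letter-level task has to be solved indirectly.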

JJ: I see your point. The funny thing is that I just tried another word, "immediate". I found that it can answer correctly that the reverse of the word "immediate", "i-m-m-e-d-i-a-t-e" is the right answer -- "e-t-a-i-d-e-m-m-i". So it is a similar length of word as "imminent". But it can answer it in the right way. So how do you explain this? Maybe there'll be a random or some rules that we don't know that ChatGPT categorizes, as you mentioned, different sets of letters into different groups. And sometimes you get the answer right, sometimes you get the answer wrong.

Yihan: Yeah, exactly. Thank you for trying that, too. This also makes another point: if you ask me why this is the case, my answer is I don't know. I would doubt that the developers of ChatGPT would know the answer, because nobody knows what happens in a model that contains billions of parameters. Nobody can do such complex calculation.

And here, actually, your example together with my example, "imminent" and "immediate", would make another point about GPT's limitation. It's that we don't know when it will answer wrongly. It cannot be predicted: you try this word, it's correct, but if you try another word, then it's wrong. It's not consistent. As you said, "imminent" and "immediate" are very similar, but it can get one correct and one wrong. So one potential limitation is that we don't know when ChatGPT will make a mistake. This is a very difficult question to answer.

And a follow-up question is, when ChatGPT makes a mistake, can you make it correct itself? You can also try with the "imminent" example. If ChatGPT makes a mistake the first time, that's fine. If you tell ChatGPT "this is wrong, please redo this task again", based on my experience, ChatGPT will repeat that mistake again and again. It will never be able to fix that, because as I explained, it's not being trained to work at a symbolic level. So that's another potential limitation of ChatGPT.

But I'm not saying that ChatGPT will never be able to solve it. I'm saying, currently, with ChatGPT itself, it cannot fix this problem. But OpenAI is inviting other developers to add their plug-ins into the GPT product. So that combination of GPT and plug-ins may be the solution to this problem. That's why I would like to embrace and emphasize a complementary approach to AI. GPT is really powerful, but I think we also need to add other things to overcome its limitations and make it better, more comprehensive and well-rounded.
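[Note: one way to picture this complementary idea is a small symbolic checker that sits next to the model and verifies its answer. The sketch below is an editorial illustration in plain Python. It does not use OpenAI's plug-in interface or Mind AI's technology, and the names `symbolic_reverse` and `checked_reverse` are hypothetical.]

```python
def symbolic_reverse(word: str) -> str:
    """Letter-level reversal, used here as the trusted symbolic tool."""
    return word[::-1]


def checked_reverse(word: str, model_answer: str) -> tuple[str, bool]:
    """Check a model's reversal against the symbolic tool.

    Returns the final answer and whether the model's answer was accepted.
    """
    expected = symbolic_reverse(word)
    # Normalize answers spelled out like "t-a-c".
    cleaned = model_answer.replace("-", "").strip().lower()
    if cleaned == expected:
        return cleaned, True
    return expected, False  # fall back to the symbolic result


print(checked_reverse("cat", "t-a-c"))                 # ('tac', True)
print(checked_reverse("imminent", "t-n-e-m-n-i-m-i"))  # ('tnenimmi', False)
```

The checker also makes the failure visible: the second value tells you whether the model's own answer was correct.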

JJ: Very interesting. Thank you for this great, great example. And I also asked ChatGPT, for fun, the question that I asked you about the potential of AI language models, and let's see how it responded. It's a long answer, and I will cut it short. It basically said that,

" ... However, while AI language models have made significant strides in natural language understanding, they still have limitations. For example, AI language models may not always correctly understand the nuances of language, such as sarcasm or irony.

Additionally, AI language models lack the ability to understand language in the same way humans do, as they rely on statistical patterns in data to make predictions. This means that AI language models may not have a true "comprehension" of language in the same way humans do, although they can still be highly effective at processing and generating language ... "

JJ: It seems to me that ChatGPT knows its limitations, though it didn't mention the specific problem we just found through the example. But it knows it has some limitations. And I actually don't know whether that is good or bad because, as you have just said, they make mistakes and sometimes we have to recognize that. And I think knowing one's limitations, on the one hand, is the prerequisite for evolving. But it also can be a little bit terrifying if AI, ChatGPT, can have real self-consciousness and be able to evolve in that way. But actually, now it does not have that. We know that it does have to overcome some challenges. And it might take some time, as you just mentioned.

JJ: Do you have any specific suggestions to our listeners in terms of how to better utilize ChatGPT-like software to free up our time and increase our efficiency?

Yihan: Yeah, I think that's a very important question. I think for us, it will be inevitable in our work in the near future that we will use ChatGPT in one way or another. I have read some tips on using ChatGPT. I also think about this.

So I would say my suggestions are just like what Microsoft advocated: you need to reinvent your productivity. My suggestion is, before you ever use ChatGPT, you need to rethink the work that you are doing. For example, if you are writing code or writing scripts, I think you should abandon your conventional perception of your work and give it a new meaning or new idea. For example, if you are a journalist writing articles and newsletters, the first thing to do is to create a pipeline of your work.

Now you are not treating your work as individual manual work, but you are treating your work as engineering, as production, as a pipeline. So what is your input? And what are your stages of processing this input? And what is your expected output? And then once you have this pipeline, the next thing is to think about where you place ChatGPT in your work pipeline. And then the third step is to give ChatGPT a role. If you're writing newsletters and you want them edited, then you will tell GPT "now you're an editor". If you are a designer, then you will tell GPT "now you are a designer". So you give ChatGPT a PERSONA so it will perform its job better.

And also you will need to give it a very precise prompt. That will require you to really know what your job is. You don't just simply give ChatGPT something like "write a 500-word letter for me", but you want to spell out how many paragraphs, how many sections, and what the gist of each section is. And what is the conclusion? What is the evidence? The better the prompt you give to ChatGPT, the better the result it will return to you.

And also, another tip is to give ChatGPT an example. The example would be your desired output. Once ChatGPT sees that example, it will try to make its answer close to the example that you provided, so that the end product will be more similar to the one you really wanted.
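[Note: the three tips above, a persona, a precise task description and an example of the desired output, can be combined into one reusable prompt template. The Python sketch below is an editorial illustration; the function `build_prompt`, its parameters and the sample wording are hypothetical, and it only builds the prompt text without calling any model.]

```python
def build_prompt(persona, task, requirements, example=None):
    """Assemble a prompt from a persona, a task, precise requirements
    and, optionally, an example of the desired output."""
    lines = [f"You are {persona}.", f"Task: {task}", "Requirements:"]
    lines += [f"- {requirement}" for requirement in requirements]
    if example:
        lines += ["Example of the desired output:", example]
    return "\n".join(lines)


prompt = build_prompt(
    persona="an editor of an English-language newsletter",
    task="Edit the draft below for clarity.",
    requirements=[
        "Keep it to about 500 words in four paragraphs.",
        "State the gist of each section in its first sentence.",
        "End with a one-sentence conclusion.",
    ],
    example="Shenzhen's tech sector grew quickly last year. ...",
)
print(prompt)
```

Keeping the template in one place also fits the pipeline idea: the same persona and requirements can be reused for every draft that passes through that stage.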

JJ: That's very helpful. You were saying that I should act or think like an engineer or even like a robot, and also allocate some role [to ChatGPT]? Do you mean that actually, I should write in the prompt telling the ChatGPT that you are an editor? Should I do that?

Yihan: Yes, exactly. Give it a persona. And then also to be more precise with my word, I think my suggestion is to encourage everyone to think from a perspective, not just an engineer, but as a manager. So you are the manager of your whole work. And ChatGPT is one of your helpers. And now you need to make the full use of your helper together with your creativity, and then towards building your product. So you are a product owner and manager of your work. That means, more than an author of your work.

JJ: Yeah, I see. As a non-native English speaker, now I use ChatGPT normally to learn more authentic expressions, which makes my newsletter contents easier for the readers to understand. And additionally, when some articles require a translation, sometimes I use it for initial translation, then I further add, adjust and optimize it manually. I do think it already helps me. And your suggestions actually just remind me of some people saying that, when we have ChatGPT, we now have to be able to become a good teacher, a good guide or a good coach. So being able to write good, effective prompts is very important to help ChatGPT help us as an assistant and generate the real thing that we want. That makes sense.

Yihan: Exactly. So that's why I really like the idea of reinventing your productivity -- It's not just improving, but it's reinventing.

JJ: One last question before we move to the recommendations section. What will GPT-5 and GPT-10 look like in the future? What impact will they have on the entire technology industry and even human society? I know this is a very big question. You can just give me your answer from your perspective.

Yihan: Of course. I actually thought about this question, but I'm just giving you my imaginative answer. For ChatGPT-5, I think I have some confidence in that based on its previous versions. I think ChatGPT-5 will be almost completely multimodal. ChatGPT-5 will be able to process text, speech, audio, image, and video altogether. So I think by the time ChatGPT-5 comes out, the model should be able to deal with multimodal information and then manipulate information in one modality and convert it to another modality. So it will be a multimodal player. In this part I have some confidence. It will be able to do it. It's already indicated in GPT-4, which can do text and image. And in the future it will add audio and video. That's about GPT-5.

GPT-10, I think, will be very far away, but not that far away either. If I use my imagination, I think GPT-10 would be a GPT that can move. Previous GPT versions only deal with information processing, not with robotics and not with actual kinesthetic movement.

JJ: It has no hands, no legs, right?

Yihan: That's right. It has eyes, brains, ears, mouth. It has no, yeah, as you said, no hands and legs or feet. I think GPT-10, I call it robotic GPT, will have hands and feet. It can move, it can do things freely as the prompt tells it, or maybe of its own free will, because Microsoft was already experimenting with giving GPT some messages and having it control a robot. But I think in the future, the GPT and the robot will be put together and will be a robotic GPT. That's my estimation.

And for the influence of GPT, it's already like an atomic bomb for the AI industry, and then the whole world, in general. I'm still a little bit pessimistic about its challenge to human beings. But I'm also seeing the opportunities. So every time a version of GPT comes out, that means it’s pushed the lower bound of performance. From now on the worst performance will be as good as GPT. That's the opportunity it brings to us. So you cannot do worse than GPT because you can always use GPT to generate the result. So it will become our baseline or our foundation actually, as there is an idiom in Chinese "水涨船高", right? As the sea level rises, the boat also rises. So I'm imagining that human beings are the boat, and GPT is like the sea level or the water level. Once the GPT increases, we ourselves are also improving. So that's the opportunity.

And then the challenge. It's also very concerning. There was a paper published by OpenAI. It said that 80 percent of the work in the US can be affected or even replaced by GPT. So human beings always have to worry about AI. They worry that AI might replace them afterwards. The concern has been going on forever. But this time, GPT is a little bit different. It's becoming like a real concern, because it has the ability to generate ideas, generate content, just like human beings.

And also, if we look back, previous technological innovations seemed to replace manual workers. So if we have a car, then we don't need somebody to - like in old Beijing there is a jinricksha - like somebody to pull the jinricksha and then to take us somewhere. So it tends to replace manual work. But now GPT tends to emulate and even replace knowledge workers. If you're working on creative art, working as a programmer or as a writer, ChatGPT can do some part of your job as well. So that would be the future challenge for us. And then we need to think about how we human beings can coexist with an ever-advancing technology such as GPT.

JJ: I'm sure that lots of people would feel that their jobs are threatened by the coming of ChatGPT. I remember, I think the CEO of OpenAI drew a website on paper and showed that picture to GPT-4. And then GPT-4 generated the code for the specific website he drew. And it just makes you feel like, I have learned how to code in my MBA studies. And now I feel like you don't have to learn that. You can just draw a picture, and then ChatGPT will help you to design that. So lots of people's jobs are threatened by that. I feel it really will have a significant impact on human society.

Yihan: Exactly.

JJ: Alright, let’s move on to recommendations. We invite every guest of our podcast to recommend something to our listeners. It can be a book, a movie, a TV series, a podcast, or even a video game. So Yihan, what do you have for us today?

Yihan: Yeah, I would recommend a book that's also related to our previous question. The book is called Life 3.0: Being Human in the Age of Artificial Intelligence. I like this book for two reasons, its main title and its subtitle. Its subtitle, "Being Human in the Age of Artificial Intelligence", is related to our previous question. With the advent of GPT and ChatGPT, we human beings really need to think about who we are and what we can do, because there is something called AI that can mimic our behavior and even emulate our thinking. If there is something very similar to us coming out, then we really, really need to think about who we are, right? Because human beings, we always think like we are on the top, we are the supreme being in this world, because we can make tools, we can talk about abstract ideas, we can make good use of natural resources to improve our productivity. But if there is another thing that can also do it, then are they human beings? Or are we no longer human beings? Or are we a new type of beings? So this is something we really need to think about.

And also AI is now so pervasive. Even elementary school kids in China know GPT and ChatGPT. They are talking about it in a very interesting way. They express their concern about ChatGPT, but it looks kind of funny to adults. So we need to think about who we are, being humans in the age of Artificial Intelligence. And also I like the main title, which is Life 3.0. We know GPT is evolving, like GPT-1, 2, 3, 4. So is life, right? When we are mammals, monkeys, we're like Life 1.0.

JJ: So that's like Web 3.0.

Yihan: Exactly. Well, that's the optimistic side. If ChatGPT is evolving, is becoming better, then why can't we also become better, right? Maybe there is a ChatGPT-4, but there is also Human beings-4. If there is ChatGPT-10, there are also Human beings-10. Why don't we just evolve together with this Artificial Intelligence? We learn to coexist and then work with each other to further make this world a better place, right? We're not just standing here forever while ChatGPT is rapidly iterating. No, I think the way to go is that we evolve together with it.

The author says that right now it's Life 3.0. To answer your question, we also need to ask, what does Life 4.0 look like? What does Life 10.0 look like? So I think this book does not provide answers to these questions, but it encourages us. It raises questions for us so that we start to think about these important issues. And maybe someone can have a good answer to these questions.

JJ: Yeah, I like the idea of Life 3.0 and I definitely will check it out. I guess we all have to learn how to live in a world supported or even dominated by AI. I really feel like that we can continue to talk about AI or ChatGPT for another 20 or even 50 questions. It just can influence and change lots of industries and there are lots of opportunities and issues that the human society will have to face. I am looking forward to maybe having another chat with you about this in the future. Maybe at that time you can send your ChatGPT version to talk to me.

Yihan: Yeah, sure, of course. And then also, I think at that time, we will see the evolution of ChatGPT and human beings, including ourselves, too. I'm excited to see that.

JJ: Yeah, hope at that time I still have my job and we still have all our jobs. I think otherwise we can only do this podcast for a living. Thank you so much, Yihan. What a pleasure it’s been talking to you.

Yihan: Me too. It's a really great pleasure and all the excitement and imagination you encourage. Thank you also very much for that.

JJ: Wonderful. Thank you.

JJ: The Ginger River Radio podcast is a part of the GRR media outlet. Our show is produced and edited by me, Jiang Jiang, Yu Liaojie from Shanghai International Studies University, and Jia Yuxuan from Beijing Foreign Studies University. For cooperation, investing or feedback, email me directly at jiangjiang@gingerriver.com, or just give us a rating and a review on Apple Podcasts. We would be delighted if you would recommend our podcast or newsletter to others if you find it helpful. Thank you for listening and see you next time. Take care.